are LLMs really thinking?

Posted on October 23, 2024 in thoughts • 1 min read

Let's take a simple example: when an LLM tells us that a year has 365 days, it's not because it truly understands the concept of time, the astronomy behind it, or the Gregorian calendar. Rather, it has seen the phrase "a year has 365 days" repeated so many times in its vast training data that this has become the most probable answer.

It's less about understanding and more about pattern recognition.

Here's another example. Imagine you're at a dinner party with a group of friends who all prefer different desserts. Each person votes for their favorite, and the dessert with the most votes is served to everyone. LLMs work in a similar way: every instance of an answer in the training data acts like a vote, and the answer with the most votes becomes the most probable one.
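To make the dessert-vote analogy concrete, here is a deliberately tiny sketch in Python. It is a toy, not how a real LLM is built: the `TRAINING_DATA` list and the `most_voted_answer` and `vote_shares` helpers are hypothetical, and real models learn probabilities over tokens with a neural network rather than counting whole answers. It shows the "votes" turning into relative frequencies, and what happens when a prompt never appeared in the data at all.

```python
from collections import Counter

# Hypothetical toy "training data": (prompt, answer) pairs.
# Real LLMs are trained on token sequences, not question/answer tallies.
TRAINING_DATA = [
    ("a year has", "365 days"),
    ("a year has", "365 days"),
    ("a year has", "365 days"),
    ("a year has", "12 months"),
    ("a year has", "366 days"),
]


def vote_shares(prompt, data=TRAINING_DATA):
    """Turn raw vote counts for a prompt into relative frequencies."""
    votes = Counter(answer for p, answer in data if p == prompt)
    total = sum(votes.values())
    # An unseen prompt has no votes, so this returns an empty dict.
    return {answer: count / total for answer, count in votes.items()}


def most_voted_answer(prompt, data=TRAINING_DATA):
    """Return the answer with the most votes, or None if the prompt was never seen."""
    votes = Counter(answer for p, answer in data if p == prompt)
    if not votes:
        return None  # nobody "voted": the prompt is outside the toy data
    answer, _count = votes.most_common(1)[0]
    return answer


print(most_voted_answer("a year has"))           # 365 days
print(vote_shares("a year has"))                 # {'365 days': 0.6, '12 months': 0.2, '366 days': 0.2}
print(most_voted_answer("is AI smart enough?"))  # None
```

The toy simply has nothing to say when a prompt falls outside its data. A real LLM would still produce a fluent answer in that situation, but one that is not backed by a large pile of matching "votes".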

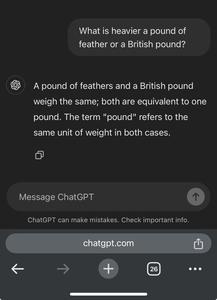

This voting system works well as long as the question falls within the bounds of the training data, but it fails miserably when you ask a more human question. Have a look at my query below.

I'm asking, once again, the same question as in my previous post: is AI smart enough?